Introduction

Creating an ETL pipeline with AWS Glue and Terraform can significantly streamline your data processing tasks. In this blog, we will walk you through the process of building an efficient AWS Glue ETL pipeline using Terraform. By the end of this guide, you’ll know how to set up AWS Glue to read data from an S3 bucket, process it with PySpark, and write the transformed data back to another S3 bucket. This step-by-step AWS Glue setup with Terraform ensures a seamless and automated ETL process, making your data management more effective and scalable.

Whether you’re new to AWS Glue or looking to optimize your existing ETL workflows, this tutorial will provide you with practical insights and best practices. We’ll cover everything from configuring Terraform for AWS Glue to setting up AWS Glue Data Catalog and creating AWS Glue jobs. By leveraging Terraform, you’ll be able to automate your AWS Glue ETL pipeline, reduce manual errors, and maintain consistent configurations across your environments. So, let’s dive into the world of serverless ETL and discover how AWS Glue and Terraform can transform your data processing capabilities.

Prerequisites

Before you begin, ensure you have the following:

AWS Account: You need an AWS account with permissions to create and manage S3 buckets, IAM roles, and AWS Glue resources.

Terraform: Install Terraform on your local machine.

Basic Knowledge: Familiarity with AWS services like S3, IAM, and AWS Glue, and a basic understanding of Terraform.

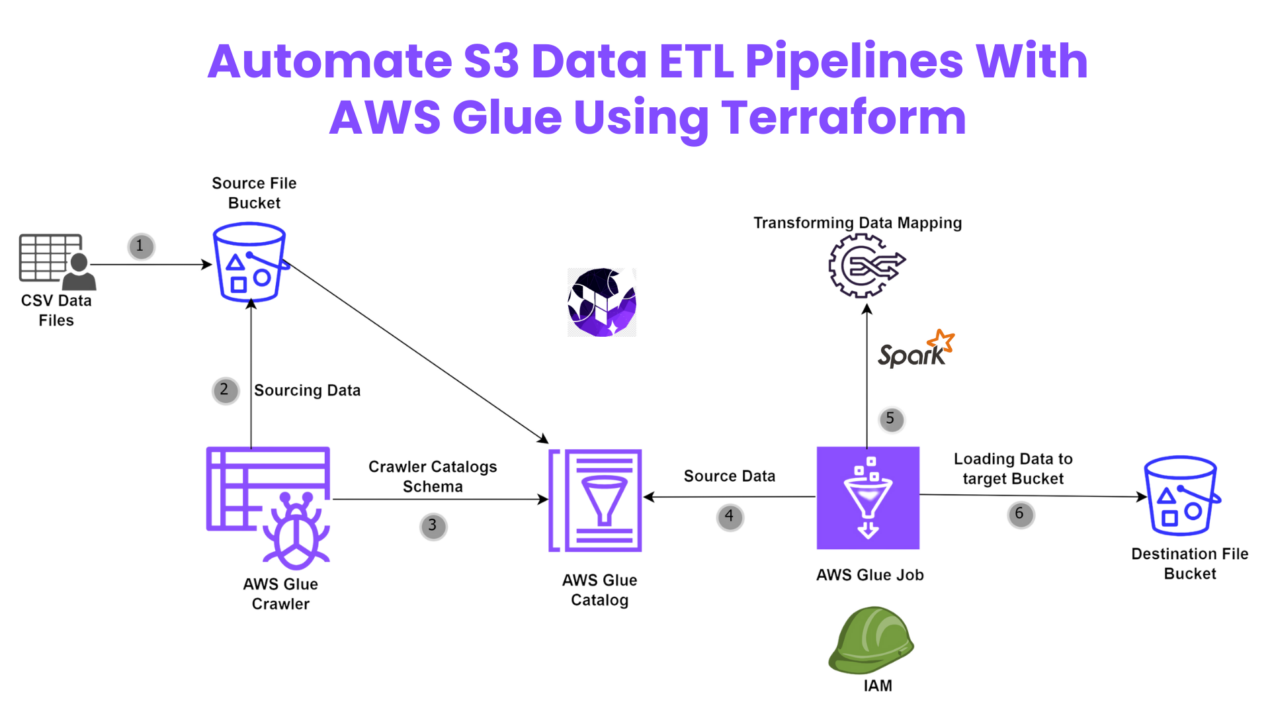

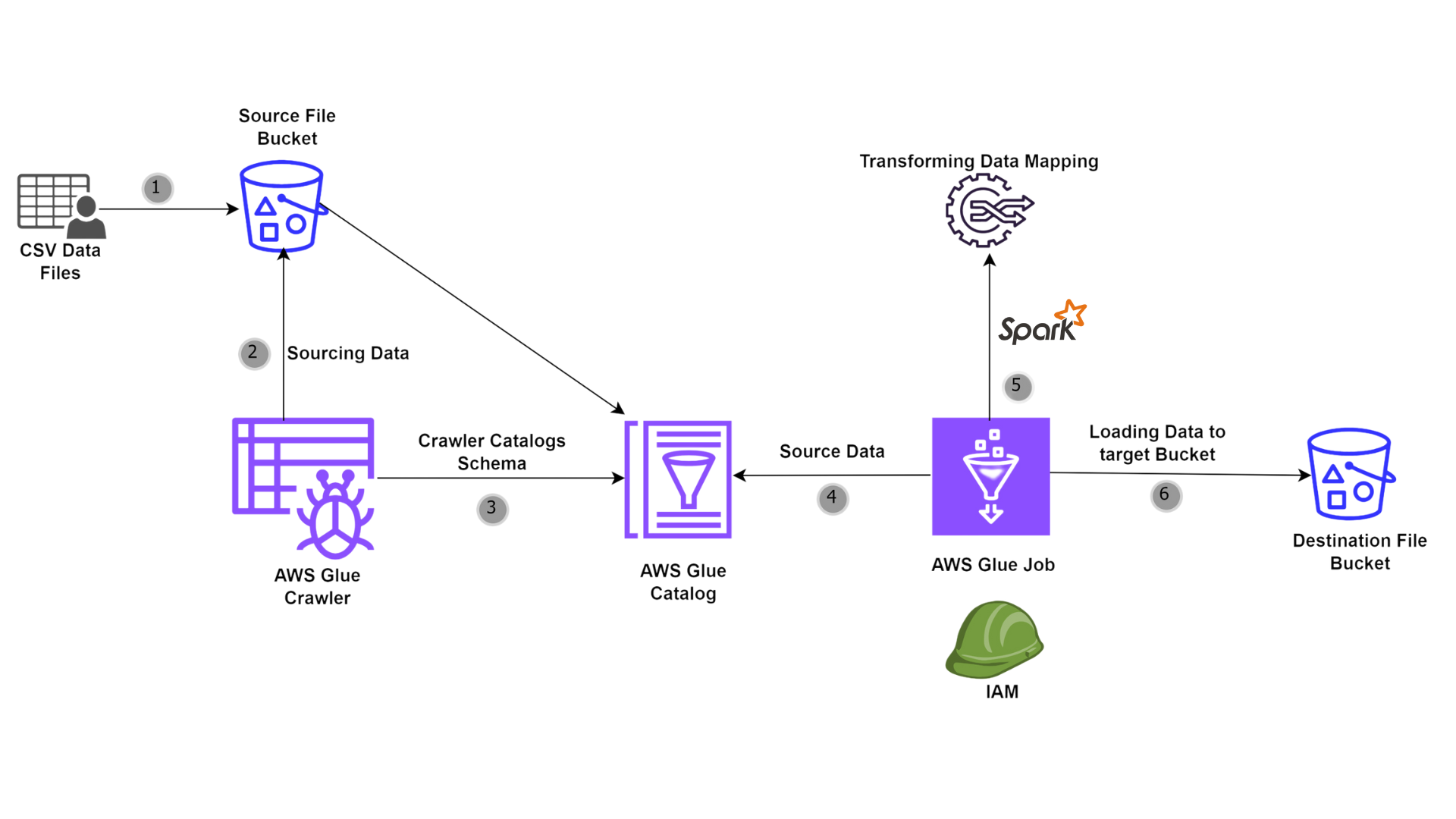

Diagrammatic Representation

AWS Resource Overview

Amazon S3

Amazon S3 (Simple Storage Service) is an object storage service that provides scalability, data availability, security, and performance. S3 allows you to store and retrieve any amount of data from anywhere on the web. In this tutorial, S3 will be used for storing the raw data, the processed data, and the scripts required for AWS Glue jobs.

AWS Glue

AWS Glue is a serverless data integration service that makes it easy to discover, prepare, and combine data for analytics, machine learning, and application development. It includes several components that work together to handle ETL processes efficiently.

AWS Glue Data Catalog

The Glue Data Catalog is a central metadata repository that stores table definitions, job definitions, and other control information to help manage the ETL process. It organizes metadata into databases and tables, making it easier for Glue jobs to discover and access data.

AWS Glue Crawler

A Glue Crawler is a service that scans data in your specified data stores (such as S3), determines the schema of the data, and populates the Glue Data Catalog with metadata. This automated process simplifies managing and updating schemas, ensuring that your ETL jobs have the most current information about the data structure.
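To make schema inference concrete, here is a toy Python sketch. It is not how Glue's built-in CSV classifier works internally. It assumes a header row, lowercases column names (the PySpark script later in this post refers to lowercase names such as `organization id`), and guesses `long`, `double`, or `string` for each column, which are the type names that script's mappings use. The helper names and sample rows are made up for illustration.

```python
import csv
import io


def infer_type(values):
    """Guess a column type from sample values (toy heuristic, not Glue's classifier)."""
    non_empty = [v for v in values if v.strip()]
    if not non_empty:
        return "string"
    for cast, type_name in ((int, "long"), (float, "double")):
        try:
            for v in non_empty:
                cast(v)
        except ValueError:
            continue  # at least one value does not fit this type; try the next one
        return type_name
    return "string"


def infer_schema(csv_text):
    """Return [(column_name, type_name), ...] for CSV text with a header row."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    header, data = rows[0], rows[1:]
    # Column names are lowercased, matching the names used in this post's script.
    return [
        (name.lower(), infer_type([row[i] for row in data if i < len(row)]))
        for i, name in enumerate(header)
    ]


# Made-up sample rows using a few of the column names from this post's script.
sample = "Index,Organization Id,Name,Founded\n1,org-001,Example Org,1990\n2,org-002,Another Org,2015\n"
print(infer_schema(sample))
# [('index', 'long'), ('organization id', 'string'), ('name', 'string'), ('founded', 'long')]
```

A real crawler samples the files itself, applies its own classifiers, and writes the result to the Data Catalog rather than returning a list.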

AWS Glue Job

A Glue Job is a business logic container for the ETL process. It can be written in Python or Scala and leverages Apache Spark for distributed processing. The job reads data from the Glue Data Catalog, applies transformations, and writes the output to the specified destination.

AWS Glue PySpark Scripting

PySpark is the Python API for Apache Spark. AWS Glue leverages PySpark scripts to define the ETL process. These scripts handle data extraction, transformation, and loading operations using Spark’s powerful distributed data processing capabilities.

AWS IAM

AWS Identity and Access Management (IAM) allows you to manage access to AWS services and resources securely. We’ll create an IAM role that AWS Glue will assume to interact with other AWS services like S3. This role ensures that Glue has the necessary permissions to read from the source bucket, write to the destination bucket, and log activities.
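IAM policies often grant actions through wildcards, such as `glue:*` in the role policy defined later in this post. The sketch below is a simplified, hypothetical checker (`action_allowed` is our own helper, not an AWS API) that shows how such patterns match action names. It only looks at `Allow` statements and ignores `Deny`, `Resource`, and `Condition`, so rely on the AWS IAM policy simulator for real access decisions.

```python
import fnmatch


def action_allowed(policy, action):
    """Return True if an Allow statement's Action pattern matches `action`.

    Simplified on purpose: ignores Deny, NotAction, Resource, and Condition.
    """
    for statement in policy.get("Statement", []):
        if statement.get("Effect") != "Allow":
            continue
        patterns = statement.get("Action", [])
        if isinstance(patterns, str):
            patterns = [patterns]
        for pattern in patterns:
            # Action names are case-insensitive; "*" and "?" act as wildcards.
            # (fnmatch would also treat "[...]" as a character class, which IAM does not.)
            if fnmatch.fnmatchcase(action.lower(), pattern.lower()):
                return True
    return False


# Two statements taken from the role policy used in this post (abridged).
glue_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": ["glue:*", "s3:ListBucket"], "Resource": ["*"]},
        {"Effect": "Allow", "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
         "Resource": ["arn:aws:s3:::*/*"]},
    ],
}

print(action_allowed(glue_policy, "glue:GetTable"))    # True
print(action_allowed(glue_policy, "s3:DeleteBucket"))  # False
```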

Why This Glue Pipeline is Required

Building a data pipeline using AWS Glue and Terraform automates the ETL process, making data processing more efficient and reliable. This setup is essential for several reasons:

Scalability: AWS Glue handles large datasets and scales automatically, ensuring that your ETL processes can handle growing data volumes.

Automation: Terraform automates the creation and management of AWS resources, reducing the risk of manual errors and increasing deployment consistency.

Cost-Effectiveness: AWS Glue is serverless, so you pay only for the time your jobs and crawlers actually run (billed in DPU-hours) instead of for idle servers. Because there is no infrastructure to manage, it also saves time and reduces operational costs.

Flexibility: The pipeline can be easily extended or modified to accommodate new data sources, transformation logic, and output destinations.

Industry-Level Use Cases

Data Warehousing: Organizations can use AWS Glue to extract data from various sources, transform it, and load it into data warehouses like Amazon Redshift for reporting and analysis.

Data Lakes: AWS Glue helps in building and managing data lakes by cataloging and transforming data stored in S3, making it readily available for analytics and machine learning.

ETL for Business Intelligence: Businesses can automate their ETL processes to ensure that data is consistently processed and made available for BI tools like Amazon QuickSight, ensuring timely and accurate insights.

Log Processing: AWS Glue can process large volumes of log data, transforming and enriching it before storing it in S3 or Amazon OpenSearch Service (formerly Amazon Elasticsearch Service) for monitoring and analysis.

Terraform Configuration Setup

To manage the AWS resources required for our ETL pipeline, we use Terraform. Terraform allows us to define our infrastructure as code, making it easier to manage and provision.

Setting Up Variables

Variables are essential for defining the configuration values that will be used throughout your Terraform scripts. These variables ensure that your configuration is flexible and reusable.

variable "aws_region" {
  description = "AWS region to deploy resources"
  default     = "us-east-1"
}

variable "source_bucket" {
  description = "S3 bucket for source data"
  default     = "dc-source-data-bucket"
}

variable "target_bucket" {
  description = "S3 bucket for target data"
  default     = "dc-target-data-bucket"
}

variable "code_bucket" {
  description = "S3 bucket for Glue job scripts"
  default     = "dc-poc-code-bucket"
}
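S3 bucket names are globally unique across all AWS accounts, so the default names above may already be taken. A minimal sketch, assuming you want to keep the same prefixes and append a random suffix: the hypothetical helpers below build overrides for the three bucket variables and write them to `terraform.tfvars.json`, a file Terraform loads automatically from the working directory. The name check covers only a simplified subset of the S3 naming rules.

```python
import json
import re
import secrets

# Simplified subset of the S3 general-purpose bucket naming rules:
# 3-63 characters, lowercase letters, digits, dots and hyphens,
# starting and ending with a letter or digit, and no ".." sequences.
_BUCKET_RE = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")


def is_valid_bucket_name(name):
    """Return True if `name` passes the simplified S3 naming check."""
    return bool(_BUCKET_RE.fullmatch(name)) and ".." not in name


def make_tfvars(suffix=None):
    """Build overrides for the three bucket variables with a shared suffix."""
    suffix = suffix or secrets.token_hex(3)  # 6 random lowercase hex characters
    tfvars = {
        "source_bucket": f"dc-source-data-bucket-{suffix}",
        "target_bucket": f"dc-target-data-bucket-{suffix}",
        "code_bucket": f"dc-poc-code-bucket-{suffix}",
    }
    for name in tfvars.values():
        if not is_valid_bucket_name(name):
            raise ValueError(f"invalid S3 bucket name: {name}")
    return tfvars


def write_tfvars(path="terraform.tfvars.json", suffix=None):
    """Write the overrides as JSON; Terraform auto-loads terraform.tfvars.json."""
    tfvars = make_tfvars(suffix)
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(tfvars, fh, indent=2)
    return tfvars
```

Call `write_tfvars()` before `terraform plan`. The overrides only take effect where the configuration references `var.source_bucket`, `var.target_bucket`, and `var.code_bucket`. The PySpark script hardcodes `dc-target-data-bucket` and the `dc_source_data_bucket` table name, so those would need the same suffix.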

Configuring the AWS Provider

The AWS provider allows Terraform to interact with AWS resources. Setting this up is crucial for ensuring that Terraform can create and manage the resources needed for our pipeline.

provider "aws" {
  region = var.aws_region
}

Specifying Terraform Version

Specifying the required Terraform version and provider versions helps in maintaining consistency and avoiding compatibility issues.

terraform {
  required_version = ">= 0.14"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = ">= 3.0"
    }
  }
}

Building Source and Destination Buckets

Creating S3 buckets for storing source data, processed data, and scripts is a foundational step. These buckets will act as the data storage points for our ETL pipeline.

Source Data Bucket

The source data bucket will hold the raw data files that need to be processed. This is the entry point for our data pipeline.

Target Data Bucket

The target data bucket will store the processed data after the ETL job completes. This is the final destination for the transformed data.

Code Bucket

The code bucket will store the scripts that AWS Glue uses to process the data. Keeping the scripts in an S3 bucket makes it easy to update and manage the ETL logic.

# S3 bucket for raw source data
resource "aws_s3_bucket" "dc-source-data-bucket" {
  bucket = var.source_bucket
}

# Upload the raw CSV file that the pipeline processes.
# Note: aws_s3_bucket_object is deprecated in AWS provider v4 and later; aws_s3_object replaces it.
resource "aws_s3_bucket_object" "data-object" {
  bucket = aws_s3_bucket.dc-source-data-bucket.bucket
  key    = "organizations.csv"
  source = "<Your Source file path>"
}

# S3 bucket for processed data
resource "aws_s3_bucket" "dc-target-data-bucket" {
  bucket = var.target_bucket
}

# S3 bucket for Glue job scripts
resource "aws_s3_bucket" "dc-poc-code-bucket" {
  bucket = var.code_bucket
}

# Upload the PySpark script that the Glue job runs
resource "aws_s3_bucket_object" "code-data-object" {
  bucket = aws_s3_bucket.dc-poc-code-bucket.bucket
  key    = "script.py"
  source = "<Your Source file path>"
}

Building the AWS Glue Service Role

Creating an IAM role for AWS Glue is crucial for enabling Glue to interact with other AWS services. This role must have permissions to read from the source bucket, write to the target bucket, and log activities.

Role Configuration

The IAM role configuration involves defining policies that grant AWS Glue the necessary permissions. These permissions include accessing S3 buckets, interacting with Glue components, and logging to CloudWatch.

resource "aws_iam_role" "glue_service_role" {
  name = "glue_service_role"

  assume_role_policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "glue.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF
}

resource "aws_iam_role_policy" "glue_service_role_policy" {
  name = "glue_service_role_policy"
  role = aws_iam_role.glue_service_role.name

  policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "glue:*",
        "s3:GetBucketLocation",
        "s3:ListBucket",
        "s3:ListAllMyBuckets",
        "s3:GetBucketAcl",
        "ec2:DescribeVpcEndpoints",
        "ec2:DescribeRouteTables",
        "ec2:CreateNetworkInterface",
        "ec2:DeleteNetworkInterface",
        "ec2:DescribeNetworkInterfaces",
        "ec2:DescribeSecurityGroups",
        "ec2:DescribeSubnets",
        "ec2:DescribeVpcAttribute",
        "iam:ListRolePolicies",
        "iam:GetRole",
        "iam:GetRolePolicy",
        "cloudwatch:PutMetricData"
      ],
      "Resource": ["*"]
    },
    {
      "Effect": "Allow",
      "Action": ["s3:CreateBucket"],
      "Resource": ["arn:aws:s3:::aws-glue-*"]
    },
    {
      "Effect": "Allow",
      "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
      "Resource": [
        "arn:aws:s3:::*/*",
        "arn:aws:s3:::*/*aws-glue-*/*"
      ]
    },
    {
      "Effect": "Allow",
      "Action": ["s3:GetObject"],
      "Resource": [
        "arn:aws:s3:::crawler-public*",
        "arn:aws:s3:::aws-glue-*"
      ]
    },
    {
      "Effect": "Allow",
      "Action": [
        "logs:CreateLogGroup",
        "logs:CreateLogStream",
        "logs:PutLogEvents"
      ],
      "Resource": ["arn:aws:logs:*:*:*:/aws-glue/*"]
    },
    {
      "Effect": "Allow",
      "Action": ["ec2:CreateTags", "ec2:DeleteTags"],
      "Condition": {
        "ForAllValues:StringEquals": {
          "aws:TagKeys": ["aws-glue-service-resource"]
        }
      },
      "Resource": [
        "arn:aws:ec2:*:*:network-interface/*",
        "arn:aws:ec2:*:*:security-group/*",
        "arn:aws:ec2:*:*:instance/*"
      ]
    }
  ]
}
EOF
}

Building AWS Glue Catalog and Crawler

The Glue Data Catalog and Crawler automate the process of discovering and cataloging data schemas. This step is essential for ensuring that your ETL jobs have accurate and up-to-date metadata.

Glue Catalog Database

Creating a database in the Glue Data Catalog helps organize and store metadata about your data. This database acts as a central repository for all metadata related to your ETL process.

Glue Crawler

The Glue Crawler scans the source data bucket, infers the schema, and populates the Glue Data Catalog with metadata. This automation simplifies managing schema changes and ensures that your ETL jobs always have current information.

# Create Glue Data Catalog database
resource "aws_glue_catalog_database" "org_report_database" {
  name         = "org-report"
  location_uri = "s3://${aws_s3_bucket.dc-source-data-bucket.id}/"
}

# Create Glue crawler that catalogs the source bucket
resource "aws_glue_crawler" "org_report_crawler" {
  name          = "org-report-crawler"
  database_name = aws_glue_catalog_database.org_report_database.name
  role          = aws_iam_role.glue_service_role.name

  s3_target {
    # Crawler targets must be full S3 URIs, including the s3:// scheme
    path = "s3://${aws_s3_bucket.dc-source-data-bucket.id}/"
  }

  schema_change_policy {
    delete_behavior = "LOG"
  }

  configuration = <<EOF
{
  "Version": 1.0,
  "Grouping": {
    "TableGroupingPolicy": "CombineCompatibleSchemas"
  }
}
EOF
}

# On-demand trigger that starts the crawler when invoked
resource "aws_glue_trigger" "org_report_trigger" {
  name = "org-report-trigger"
  type = "ON_DEMAND"

  actions {
    crawler_name = aws_glue_crawler.org_report_crawler.name
  }
}
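The PySpark script below reads the table `dc_source_data_bucket` from the `org-report` database, which is the name the crawler gives to data found at the root of `dc-source-data-bucket`. The hypothetical helper here sketches that naming under an assumption that is consistent with this post's script but is not an official specification: the crawler takes the last path component of the target (the bucket itself when crawling the bucket root), lowercases it, and replaces other characters with underscores. It also rejects paths without an `s3://` scheme, the form the crawler's `s3_target` path expects. After the crawler runs, confirm the real name in the Glue console or with `aws glue get-tables --database-name org-report`.

```python
import re
from urllib.parse import urlparse


def expected_table_name(s3_target_path):
    """Guess the catalog table name for a single-schema S3 crawler target.

    Assumption: the last path component (or the bucket itself when crawling the
    bucket root) is lowercased and non-alphanumeric characters become "_".
    """
    parsed = urlparse(s3_target_path)
    if parsed.scheme != "s3" or not parsed.netloc:
        raise ValueError(f"expected an s3:// URI, got {s3_target_path!r}")
    parts = [p for p in parsed.path.split("/") if p]
    last = parts[-1] if parts else parsed.netloc
    return re.sub(r"[^a-z0-9]", "_", last.lower())


print(expected_table_name("s3://dc-source-data-bucket/"))  # dc_source_data_bucket
```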

Building AWS Glue Job and PySpark Script

The Glue job defines the ETL process, while the PySpark script contains the logic for transforming the data.

PySpark Script

The PySpark script contains the ETL logic. It initializes the Glue context, reads data from the Glue Data Catalog, applies transformations, and writes the output to the target bucket. This script is crucial for defining how the data is processed and transformed.

import sys

from awsglue.transforms import *
from awsglue.utils import getResolvedOptions
from pyspark.context import SparkContext
from awsglue.context import GlueContext
from awsglue.job import Job

# Initialize SparkContext, GlueContext, and SparkSession
sc = SparkContext()
glueContext = GlueContext(sc)
spark = glueContext.spark_session

# Get job arguments
args = getResolvedOptions(sys.argv, ['JOB_NAME'])

# Create Glue job
job = Job(glueContext)
job.init(args['JOB_NAME'], args)

# Script generated for node AWS Glue Data Catalog
AWSGlueDataCatalog_node1704102689282 = glueContext.create_dynamic_frame.from_catalog(
    database="org-report",
    table_name="dc_source_data_bucket",
    transformation_ctx="AWSGlueDataCatalog_node1704102689282",
)

# Script generated for node Change Schema
ChangeSchema_node1704102716061 = ApplyMapping.apply(
    frame=AWSGlueDataCatalog_node1704102689282,
    mappings=[
        ("index", "long", "index", "int"),
        ("organization id", "string", "organization id", "string"),
        ("name", "string", "name", "string"),
        ("website", "string", "website", "string"),
        ("country", "string", "country", "string"),
        ("description", "string", "description", "string"),
        ("founded", "long", "founded", "long"),
        ("industry", "string", "industry", "string"),
        ("number of employees", "long", "number of employees", "long"),
    ],
    transformation_ctx="ChangeSchema_node1704102716061",
)

# Script generated for node Amazon S3
AmazonS3_node1704102720699 = glueContext.write_dynamic_frame.from_options(
    frame=ChangeSchema_node1704102716061,
    connection_type="s3",
    format="csv",
    connection_options={"path": "s3://dc-target-data-bucket", "partitionKeys": []},
    transformation_ctx="AmazonS3_node1704102720699",
)

job.commit()
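`ApplyMapping` keeps only the columns listed in `mappings`, renaming and casting each one from its source type to its target type. The pure-Python analogue below illustrates those semantics on plain dictionaries, so you can reason about a mapping list without a Glue environment. It is a hypothetical helper, not Glue's implementation, and it only knows the handful of type names used in this script.

```python
# Python casts for the type names used in this post's mappings. Python ints are
# unbounded, so "int" and "long" behave the same here (unlike in Spark).
CASTS = {"int": int, "long": int, "double": float, "string": str}


def apply_mapping(records, mappings):
    """Rename, cast, and project each record using Glue-style 4-tuples.

    Each mapping is (source_name, source_type, target_name, target_type).
    Columns not listed in `mappings` are dropped; missing values become None.
    """
    result = []
    for record in records:
        row = {}
        for source_name, _source_type, target_name, target_type in mappings:
            value = record.get(source_name)
            row[target_name] = None if value is None else CASTS[target_type](value)
        result.append(row)
    return result


mappings = [
    ("index", "long", "index", "int"),
    ("name", "string", "name", "string"),
    ("founded", "long", "founded", "long"),
]
rows = [{"index": 1, "name": "Example Org", "founded": "1990", "extra": "dropped"}]
print(apply_mapping(rows, mappings))
# [{'index': 1, 'name': 'Example Org', 'founded': 1990}]
```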

Glue Job

The Glue job specifies the script to run, the IAM role to assume, the Glue version, the worker type, and other configuration settings. This job orchestrates the ETL process, reading data from the source, applying transformations, and writing the results to the target bucket.

resource "aws_glue_job" "glue_job" {
  name              = "poc-glue-job"
  role_arn          = aws_iam_role.glue_service_role.arn
  description       = "Transfer CSV data from the source bucket to the target bucket"
  glue_version      = "4.0"
  worker_type       = "G.1X"
  timeout           = 2880 # minutes (48 hours)
  max_retries       = 1
  number_of_workers = 2

  command {
    name            = "glueetl"
    python_version  = "3"
    script_location = "s3://${aws_s3_bucket.dc-poc-code-bucket.id}/script.py"
  }

  default_arguments = {
    "--enable-auto-scaling"              = "true"
    "--enable-continuous-cloudwatch-log" = "true"
    "--datalake-formats"                 = "delta"
    # The two paths below are passed to the script, but the PySpark script above
    # hardcodes its own source table and target path and does not read them.
    "--source-path"                  = "s3://${aws_s3_bucket.dc-source-data-bucket.id}/"
    "--destination-path"             = "s3://${aws_s3_bucket.dc-target-data-bucket.id}/"
    "--job-name"                     = "poc-glue-job"
    "--enable-continuous-log-filter" = "true"
    "--enable-metrics"               = "true"
  }
}

output "glue_crawler_name" {
  value = aws_glue_crawler.org_report_crawler.name
}
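The job's `default_arguments` reach the script as `--key value` pairs on the command line, and Glue scripts normally read them with `getResolvedOptions`, as the script above does for `JOB_NAME`. Because `awsglue` is usually not installed locally, the sketch below uses a hypothetical `resolve_options` stand-in built on `argparse` to show how the script could pick up `--source-path` and `--destination-path` instead of hardcoding bucket names. It mimics the idea of `getResolvedOptions`, not its exact behavior.

```python
import argparse


def resolve_options(argv, options):
    """Hypothetical local stand-in for awsglue.utils.getResolvedOptions.

    Requires `--<option> value` for every name in `options` and ignores any
    other arguments on the command line.
    """
    parser = argparse.ArgumentParser(add_help=False, allow_abbrev=False)
    for option in options:
        parser.add_argument(f"--{option}", dest=option, required=True)
    parsed, _unknown = parser.parse_known_args(argv[1:])
    return vars(parsed)


argv = [
    "script.py",
    "--JOB_NAME", "poc-glue-job",
    "--source-path", "s3://dc-source-data-bucket/",
    "--destination-path", "s3://dc-target-data-bucket/",
    "--enable-metrics", "true",
]
opts = resolve_options(argv, ["JOB_NAME", "source-path", "destination-path"])
print(opts["destination-path"])  # s3://dc-target-data-bucket/
```

If you adopt this pattern in the real script, check the AWS Glue documentation for how `getResolvedOptions` handles hyphenated names; underscored argument names sidestep the question.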

1️⃣ The terraform fmt command rewrites Terraform configuration files to a canonical format and style. 👨‍💻

terraform fmt

2️⃣ Initialize the working directory by running the command below. Initialization downloads the provider plugins needed to work with the resources. 👨‍💻

terraform init

3️⃣ Create an execution plan based on your Terraform configuration. 👨‍💻

terraform plan

4️⃣ Apply the changes to create the resources. The -auto-approve flag skips the interactive confirmation prompt. 👨‍💻

terraform apply -auto-approve
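`terraform apply` only creates the resources; the crawler and the job still have to run. A minimal sketch, assuming boto3 is installed and credentials for the deployment region are configured: start the crawler, wait until it returns to the `READY` state, then start the job and poll until the run reaches a final state. The helper functions are hypothetical; `start_crawler`, `get_crawler`, `start_job_run`, and `get_job_run` are boto3 Glue client methods, and the names passed in match the Terraform resources above.

```python
import time

# Job run states after which the run will not change again.
TERMINAL_JOB_STATES = {"SUCCEEDED", "FAILED", "STOPPED", "TIMEOUT", "ERROR", "EXPIRED"}


def wait_for_crawler(glue, crawler_name, poll_seconds=15):
    """Block until the crawler is back in the READY state."""
    while True:
        # Sleep first so a just-started crawler has time to leave READY.
        time.sleep(poll_seconds)
        if glue.get_crawler(Name=crawler_name)["Crawler"]["State"] == "READY":
            return


def run_pipeline(glue, crawler_name, job_name, poll_seconds=15):
    """Crawl the source bucket, then run the Glue job and return its final state."""
    glue.start_crawler(Name=crawler_name)
    wait_for_crawler(glue, crawler_name, poll_seconds)

    run_id = glue.start_job_run(JobName=job_name)["JobRunId"]
    while True:
        run = glue.get_job_run(JobName=job_name, RunId=run_id)["JobRun"]
        if run["JobRunState"] in TERMINAL_JOB_STATES:
            return run["JobRunState"]
        time.sleep(poll_seconds)
```

For example: `run_pipeline(boto3.client("glue", region_name="us-east-1"), "org-report-crawler", "poc-glue-job")`. Passing the client in, rather than creating it inside the function, keeps the logic testable with a fake client.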

🥁🥁 Conclusion 🥁🥁

In conclusion, setting up an AWS Glue ETL pipeline with Terraform not only automates your data processing tasks but also ensures scalability and efficiency. By following this guide, you’ve learned how to read data from S3, transform it using PySpark, and write the results back to S3. We’ve covered best practices for AWS Glue ETL jobs, including configuring the Glue Data Catalog and setting up Glue crawlers. With Terraform, you can easily manage and deploy these resources, making your ETL process more reliable and repeatable.

AWS Glue offers powerful tools for large data sets, and by integrating it with Terraform, you can optimize your workflows and enhance performance. Whether you’re handling schema changes, securing your ETL processes with IAM roles, or monitoring jobs with CloudWatch, AWS Glue and Terraform provide a comprehensive solution for your data transformation needs. With this knowledge, you’re now equipped to tackle real-world ETL challenges and take full advantage of serverless data processing.

Happy automating! 😊

📢 Stay tuned for my next blog…..

Author - Dheeraj Choudhary
