Welcome back to the series Deploying on AWS Cloud Using Terraform 👨🏻💻. Throughout this series we focus on core Terraform concepts by launching important basic services from scratch, taking your infra-as-code journey from beginner to advanced with real-life use cases and YouTube tutorials.

If you are new to Terraform and want to start your journey as an infra-as-code developer as part of your DevOps role, buckle up 🚴♂️ and let's get started by implementing the core Terraform concepts…🎬

🔎Basic Terraform Configurations🔍

As part of the basic configuration, we are going to set up three Terraform files:

1. Providers File:- Terraform relies on plugins called “providers” to interact with cloud providers, SaaS providers, and other APIs.

Providers are distributed separately from Terraform itself, and each provider has its own release cadence and version numbers.

The Terraform Registry is the main directory of publicly available Terraform providers, and hosts providers for most major infrastructure platforms. Each provider has its own documentation, describing its resource types and their arguments.

We will use the AWS provider throughout this series. Make sure to refer to the Terraform AWS provider documentation for up-to-date information.

Provider documentation in the Registry is versioned; you can use the version menu in the header to change which version you’re viewing.

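provider "aws" {
  # Reference the variable directly; wrapped in quotes it would be the literal string "var.AWS_REGION".
  region = var.AWS_REGION

  # Left empty in the original; point this at your own credentials file.
  shared_credentials_file = ""
}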

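2. Variables File:- Terraform variables let us customize aspects of Terraform modules without altering the module’s own source code. This allows us to share modules across different Terraform configurations and reuse the same values in multiple places. In this example the same file also holds the data sources that look up the existing VPC, public subnets, and target group.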

When you declare variables in the root terraform module of your configuration, you can set their values using CLI options and environment variables. When you declare them in child modules, the calling module should pass values in the module block.

variable "AWS_REGION" {
  default = "us-east-1"
}

#-------------------------Fetch VPC ID---------------------------------

data "aws_vpc" "GetVPC" {
  filter {
    name   = "tag:Name"
    values = ["CustomVPC"]
  }
}

#-------------------------Variables For Autoscaling---------------------

variable "instance_type" {
  type    = string
  default = "t2.micro"
}

variable "autoscaling_group_min_size" {
  type    = number
  default = 2
}

variable "autoscaling_group_max_size" {
  type    = number
  default = 3
}

variable "aws_key_pair" {
  type = string
  # No default: the original leaves this value blank, so pass the name of
  # your own EC2 key pair (for example with -var or a .tfvars file).
}

#-------------------------Fetch Public Subnets List----------------------

data "aws_subnet_ids" "GetSubnet_Ids" {
  vpc_id = data.aws_vpc.GetVPC.id

  filter {
    name   = "tag:Type"
    values = ["Public"]
  }
}

# One aws_subnet lookup per ID returned above. Since Terraform 0.12 the
# expressions can be written directly, without the "${...}" wrapper.
data "aws_subnet" "GetSubnet" {
  count = length(data.aws_subnet_ids.GetSubnet_Ids.ids)
  id    = tolist(data.aws_subnet_ids.GetSubnet_Ids.ids)[count.index]
}
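The aws_subnet_ids data source used above is deprecated in newer releases of the AWS provider in favour of aws_subnets. Below is a minimal sketch of the same lookup with the newer data source, assuming a provider version that ships it. The names GetSubnets and public_subnet_ids are illustrative and not part of the original code.

```hcl
# Assumes an AWS provider release that includes the aws_subnets data source.
data "aws_subnets" "GetSubnets" {
  # Restrict the search to the VPC looked up earlier.
  filter {
    name   = "vpc-id"
    values = [data.aws_vpc.GetVPC.id]
  }

  # Same tag filter as the original aws_subnet_ids lookup.
  filter {
    name   = "tag:Type"
    values = ["Public"]
  }
}

# Hypothetical local value holding the resulting list of subnet IDs.
locals {
  public_subnet_ids = data.aws_subnets.GetSubnets.ids
}
```

With this variant, local.public_subnet_ids could be passed straight to vpc_zone_identifier, so the per-subnet aws_subnet lookup would no longer be needed.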

#-------------------------Fetch Target Group ARN----------------------

data "aws_lb_target_group" "elb_tg" {
  arn = var.elb_tg_arn
}
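The aws_lb_target_group data source above reads var.elb_tg_arn, which none of the snippets in this post declare. A minimal declaration could look like the following. The description text is illustrative, and no default is given because the ARN is specific to your account.

```hcl
# Hypothetical declaration for the variable referenced by data.aws_lb_target_group.elb_tg.
variable "elb_tg_arn" {
  type        = string
  description = "ARN of the existing load balancer target group that the ASG instances register with"
}
```

The value can then be supplied at plan time, for example with the -var option or through a TF_VAR_elb_tg_arn environment variable.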

3. Versions File:- It is a best practice to maintain a versions file that specifies the Terraform version against which your stack is tested and runs in production.

terraform {
  required_version = ">= 0.12"
}
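Because providers have their own release cadence, the versions file is also a natural place to pin the AWS provider. The sketch below would replace the block above. It assumes Terraform 0.13 or later (needed for the source syntax), and the "~> 3.0" constraint is an assumption chosen because the aws_subnet_ids data source and the shared_credentials_file argument used in this post are available in that provider series.

```hcl
terraform {
  # required_providers with a source address needs Terraform 0.13 or later.
  required_version = ">= 0.13"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 3.0" # assumed constraint; adjust to the version you have tested
    }
  }
}
```

Running terraform init after changing the constraint downloads a matching provider release.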

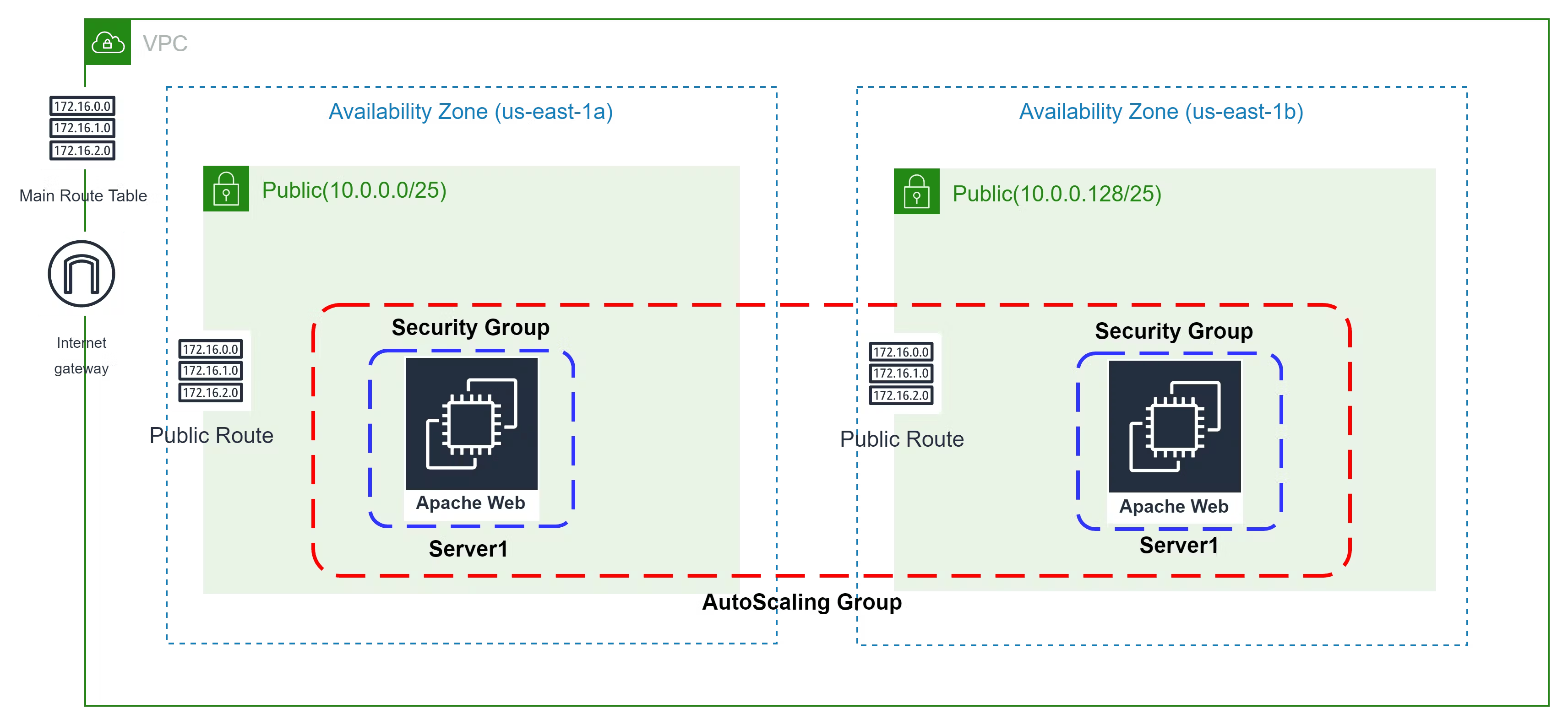

🎨 Diagrammatic Representation 🎨

Configure Security Group For Launch Configuration

A security group acts as a virtual firewall that controls the inbound and outbound traffic of the resources it is attached to.

🔳 Resource

✦ aws_security_group:- This resource defines inbound and outbound traffic rules. Security groups apply at the instance (network interface) level; subnet-level filtering is the job of network ACLs.

🔳 Arguments

✦ name:- This is an optional argument to define the name of the security group.

✦ description:- This is an optional argument to mention details about the security group that we are creating.

✦ vpc_id:- This is an optional argument that sets the ID of the VPC the security group belongs to. If it is omitted, the group is created in the region's default VPC, so we set it explicitly here.

✦ tags:- One of the most important properties, available on most resources. Always make sure to attach tags to all your resources.

EGRESS & INGRESS are processed in attribute-as-blocks mode.

resource "aws_security_group" "asg_sg" {
  name        = "ASG_Allow_Traffic"
  description = "Allow all inbound traffic for asg"
  vpc_id      = data.aws_vpc.GetVPC.id

  # HTTPS
  ingress {
    from_port   = 443
    to_port     = 443
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # SSH
  ingress {
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # ICMP: from_port is the ICMP type (8 = echo request) and to_port is the ICMP code.
  ingress {
    from_port   = 8
    to_port     = 0
    protocol    = "icmp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # HTTP
  ingress {
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # Allow all outbound traffic ("-1" means every protocol).
  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }

  tags = {
    Name = "terraform-asg-security-group"
  }
}
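The security group above repeats an almost identical ingress block per port and opens SSH to 0.0.0.0/0. As a sketch of one possible variant (not the configuration used in this series), the repeated web ports can be generated with a dynamic block and SSH limited to a caller-supplied range. The names asg_sg_compact, asg_ingress_tcp_ports, and ssh_allowed_cidr are hypothetical.

```hcl
# Hypothetical variable: the CIDR range allowed to reach port 22.
variable "ssh_allowed_cidr" {
  type        = string
  description = "CIDR block allowed to connect over SSH"
}

locals {
  # Hypothetical list of TCP ports that stay open to the internet.
  asg_ingress_tcp_ports = [80, 443]
}

resource "aws_security_group" "asg_sg_compact" {
  name        = "ASG_Allow_Traffic_Compact"
  description = "Web traffic from anywhere, SSH from a restricted range"
  vpc_id      = data.aws_vpc.GetVPC.id

  # One ingress rule is generated per port in the list.
  dynamic "ingress" {
    for_each = local.asg_ingress_tcp_ports
    content {
      from_port   = ingress.value
      to_port     = ingress.value
      protocol    = "tcp"
      cidr_blocks = ["0.0.0.0/0"]
    }
  }

  # SSH only from the supplied range.
  ingress {
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = [var.ssh_allowed_cidr]
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
}
```

The dynamic block keeps the rule list in one place, so adding a port means editing the list rather than copying another ingress block.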

👨🏻💻Deploy Launch Config and AutoScaling group👨🏻💻

Before creating the Autoscaling group let’s first define the launch configuration for EC2 instances that will be used by autoscaling later.

🔳 Resource

✦ aws_launch_configuration:- This resource creates a launch configuration for EC2 instances that we are going to deploy as part of our autoscaling group.

🔳 Arguments

✦ name_prefix:- This is an optional argument to create a unique name beginning with the specified prefix.

✦ image_id:- This is a mandatory argument that sets the ID of the AMI from which the EC2 instances will be launched.

✦ instance_type:- This is a mandatory argument that sets the instance type for the EC2 instances, such as t2.small or t2.micro.

✦ key_name:- This is an optional argument naming the key pair used to enable SSH connections to your EC2 instances.

✦ security_groups:- This is an optional argument listing the security groups that control the inbound and outbound traffic of your EC2 instances.

✦ user_data:- This is an optional argument to provide commands or scripts to be executed during the launch of the EC2 instance.

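✦ lifecycle:- lifecycle is a nested block that can appear within a resource block.

create_before_destroy:- By default, when Terraform must change a resource argument that cannot be updated in place due to remote API limitations, it destroys the existing object and then creates a replacement with the newly configured arguments. Setting create_before_destroy = true reverses that order: the replacement is created first and the old object is destroyed afterwards. That matters here because a launch configuration that is still attached to an Autoscaling group cannot be deleted first.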

resource "aws_launch_configuration" "launch_config_dev" {
  name_prefix                 = "webteir_dev"
  image_id                    = "ami-0742b4e673072066f"
  instance_type               = var.instance_type
  key_name                    = var.aws_key_pair
  security_groups             = [aws_security_group.asg_sg.id]
  associate_public_ip_address = true

  # Only the final echo line of the original script survives. The shebang is
  # assumed, and any earlier setup commands (for example installing a web
  # server) are not shown.
  user_data = <<EOF
#!/bin/bash
echo "Deployed EC2 Using ASG" | sudo tee /var/www/html/index.html
EOF

  lifecycle {
    create_before_destroy = true
  }
}
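AWS now recommends launch templates over launch configurations. As a hedged sketch of how the same settings might map onto an aws_launch_template, assuming the same AMI, variables, and security group as above (the resource name launch_template_dev is hypothetical, and the user data shows only the one script line visible in this post):

```hcl
resource "aws_launch_template" "launch_template_dev" {
  name_prefix   = "webteir_dev"
  image_id      = "ami-0742b4e673072066f"
  instance_type = var.instance_type
  key_name      = var.aws_key_pair

  # The public IP setting and the security groups live on the network interface.
  network_interfaces {
    associate_public_ip_address = true
    security_groups             = [aws_security_group.asg_sg.id]
  }

  # Launch templates expect user data to be base64-encoded.
  user_data = base64encode(<<-EOF
    #!/bin/bash
    echo "Deployed EC2 Using ASG" | sudo tee /var/www/html/index.html
  EOF
  )

  lifecycle {
    create_before_destroy = true
  }
}

# In the Autoscaling group, the launch_configuration argument would then be
# replaced by a launch_template block:
#
#   launch_template {
#     id      = aws_launch_template.launch_template_dev.id
#     version = "$Latest"
#   }
```

Launch templates are versioned, so "$Latest" makes the group pick up each new template version; pinning a specific version number is the more conservative choice.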

🔳 Resource

✦ aws_autoscaling_group:- This resource creates an Autoscaling group, a collection of EC2 instances managed as one unit that can be registered with load balancer target groups.

🔳 Arguments

✦ launch_configuration:- This is an optional argument naming the launch configuration to use.

✦ min_size:- This is a mandatory argument to define the minimum size of the Autoscaling group.

✦ max_size:- This is a mandatory argument to define the maximum size of the Autoscaling group.

✦ target_group_arns:- This is an optional argument listing the target group ARNs with which the group's EC2 instances are registered.

✦ vpc_zone_identifier:- This is an optional argument to define a list of subnet IDs to launch resources in.

✦ tag:- A nested block that attaches a tag to the group; propagate_at_launch controls whether the tag is also applied to the instances the group launches. Always make sure to attach tags to all your resources.

resource "aws_autoscaling_group" "autoscaling_group_dev" {
  launch_configuration = aws_launch_configuration.launch_config_dev.name
  min_size             = var.autoscaling_group_min_size
  max_size             = var.autoscaling_group_max_size
  target_group_arns    = [data.aws_lb_target_group.elb_tg.arn]

  # Splat expression: the IDs of every subnet found by the counted data source.
  vpc_zone_identifier = data.aws_subnet.GetSubnet[*].id

  tag {
    key                 = "Name"
    value               = "autoscaling-group-dev"
    propagate_at_launch = true
  }
}
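The group above sets min_size and max_size, but nothing shown in this post tells AWS when to move between them. One common option is a target tracking policy; the sketch below is an assumption rather than part of the original stack, and both the resource name cpu_target and the 50 percent CPU target are illustrative.

```hcl
# Hypothetical policy: keep the average CPU of the group near the target value.
resource "aws_autoscaling_policy" "cpu_target" {
  name                   = "cpu-target-tracking"
  autoscaling_group_name = aws_autoscaling_group.autoscaling_group_dev.name
  policy_type            = "TargetTrackingScaling"

  target_tracking_configuration {
    predefined_metric_specification {
      predefined_metric_type = "ASGAverageCPUUtilization"
    }

    # Illustrative target; tune it to your workload.
    target_value = 50.0
  }
}
```

With such a policy attached, the group adds or removes instances within the min_size and max_size bounds defined in the variables file.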

🔳 Output File

Output values make information about your infrastructure available on the command line, and can expose information for other Terraform configurations to use. Output values are similar to return values in programming languages.

output "asg_sg" {
  value       = aws_security_group.asg_sg.id
  description = "This is Security Group for autoscaling launch configuration."
}

output "aws_launch_configuration" {
  value       = aws_launch_configuration.launch_config_dev.id
  description = "This is ASG Launch Configuration ID."
}

output "autoscaling_group_dev" {
  value       = aws_autoscaling_group.autoscaling_group_dev.id
  description = "This is ASG ID."
}

🔊To view the entire GitHub code click here

1️⃣ The terraform fmt command is used to rewrite Terraform configuration files to a canonical format and style👨💻.

terraform fmt

2️⃣ Initialize the working directory by running the command below. The initialization includes installing the plugins and providers necessary to work with resources. 👨💻

terraform init

3️⃣ Create an execution plan based on your Terraform configurations. 👨💻

terraform plan

4️⃣ Execute the execution plan that the terraform plan command proposed. 👨💻

terraform apply -auto-approve

👁🗨👁🗨 YouTube Tutorial 📽

❗️❗️Important Documentation❗️❗️

⛔️ Hashicorp Terraform

⛔️ AWS CLI

⛔️ Hashicorp Terraform Extension Guide

⛔️ Terraform Autocomplete Extension Guide

⛔️ AWS Security Group

⛔️ AWS Launch Configuration

⛔️ AWS Autoscaling Group

⛔️ Lifecycle Meta-Argument

🥁🥁 Conclusion 🥁🥁

In this blog, we have configured the following resources:

✦ AWS Security Group for the ASG Launch Configuration.

✦ AWS Launch Configuration.

✦ AWS Autoscaling Group.

I have also listed the arguments and linked the documentation for each resource, so that the official Terraform documentation is easier to follow while you write the code.

📢 Stay tuned for my next blog…..

So, did you find my content helpful? If you did or like my other content, feel free to buy me a coffee. Thanks.

Author - Dheeraj Choudhary

RELATED ARTICLES

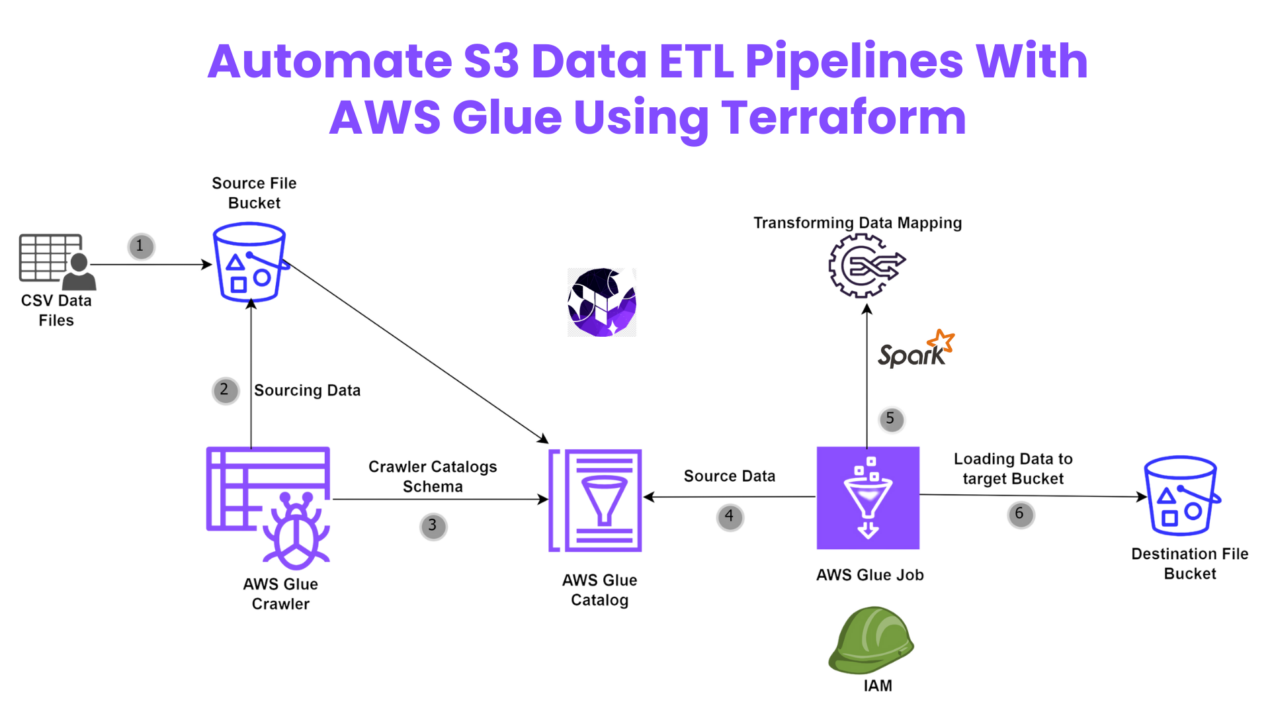

Automate S3 Data ETL Pipelines With AWS Glue Using Terraform

Discover how to automate your S3 data ETL pipelines using AWS Glue and Terraform in this step-by-step tutorial. Learn to efficiently manage and process your data, leveraging the power of AWS Glue for seamless data transformation. Follow along as we demonstrate how to set up Terraform scripts, configure AWS Glue, and automate data workflows.

Automating AWS Infrastructure with Terraform Functions

IntroductionManaging cloud infrastructure can be complex and time-consuming. Terraform, an open-source Infrastructure as Code (IaC) tool, si ...

Hi there, just became alert to your blog through Google,

and found that it’s truly informative. I’m gonna

watch out for brussels. I will be grateful if you continue this in future.

Numerous people will be benefited from your writing.

Cheers! Escape rooms hub

Very interesting topic, appreciate it for putting up..

Really great info can be found on site.Blog monetyze

Excellent blog you have here.. It’s hard to find good quality writing like yours these days. I really appreciate individuals like you! Take care!!

Very good info. Lucky me I came across your site by chance (stumbleupon). I have book marked it for later!

I seriously love your website.. Great colors & theme. Did you create this site yourself? Please reply back as I’m looking to create my own blog and would like to know where you got this from or exactly what the theme is named. Kudos.

Way cool! Some extremely valid points! I appreciate you writing this article plus the rest of the website is really good.

Excellent blog you have here.. It’s difficult to find excellent writing like yours these days. I truly appreciate individuals like you! Take care!!

Pretty! This was an extremely wonderful post. Many thanks for supplying this info.

This site really has all the info I needed concerning this subject and didn’t know who to ask.

Great information. Lucky me I found your website by chance (stumbleupon). I’ve bookmarked it for later.

This website truly has all the information I needed about this subject and didn’t know who to ask.

Spot on with this write-up, I really believe that this amazing site needs far more attention. I’ll probably be back again to read more, thanks for the info!

Having read this I believed it was really informative. I appreciate you taking the time and energy to put this content together. I once again find myself spending way too much time both reading and commenting. But so what, it was still worthwhile.

bookmarked!!, I like your site.

Very good write-up. I certainly appreciate this site. Keep writing!

Spot on with this write-up, I truly believe this web site needs much more attention. I’ll probably be returning to see more, thanks for the info!

Aw, this was an exceptionally good post. Spending some time and actual effort to produce a great article… but what can I say… I hesitate a whole lot and never seem to get anything done.

I’m very happy to find this web site. I need to to thank you for ones time just for this wonderful read!! I definitely liked every part of it and I have you bookmarked to check out new things on your site.

Your posts in this blog really shine! Glad to gain some new insights, which I happen to also cover on my page. Feel free to visit my webpage UY5 about Thai-Massage and any tip from you will be much apreciated.

Greetings! Very useful advice in this particular post! It’s the little changes that produce the most important changes. Thanks for sharing!

Hi! I just want to offer you a big thumbs up for the great info you have got right here on this post. I am returning to your site for more soon.

Very good article. I am facing some of these issues as well..

Howdy! I could have sworn I’ve been to this web site before but after looking at many of the posts I realized it’s new to me. Nonetheless, I’m certainly pleased I found it and I’ll be bookmarking it and checking back frequently.

Hi there, There’s no doubt that your web site could be having browser compatibility issues. When I look at your site in Safari, it looks fine however, when opening in I.E., it’s got some overlapping issues. I merely wanted to provide you with a quick heads up! Aside from that, fantastic website.

Having read this I thought it was extremely informative. I appreciate you spending some time and energy to put this article together. I once again find myself personally spending a significant amount of time both reading and commenting. But so what, it was still worth it.

You’ve made some good points there. I looked on the web to find out more about the issue and found most people will go along with your views on this site.

This site was… how do I say it? Relevant!! Finally I’ve found something that helped me. Thanks a lot.

After checking out a handful of the blog articles on your blog, I seriously like your technique of blogging. I book marked it to my bookmark webpage list and will be checking back soon. Please check out my web site too and let me know your opinion.

An outstanding share! I’ve just forwarded this onto a colleague who has been doing a little homework on this. And he in fact bought me dinner due to the fact that I stumbled upon it for him… lol. So let me reword this…. Thank YOU for the meal!! But yeah, thanx for spending some time to talk about this subject here on your site.

You made some really good points there. I checked on the web to find out more about the issue and found most individuals will go along with your views on this web site.

This website was… how do I say it? Relevant!! Finally I have found something which helped me. Many thanks.

This page certainly has all of the information and facts I needed concerning this subject and didn’t know who to ask.

Hi, I do think this is a great blog. I stumbledupon it 😉 I will come back once again since i have book-marked it. Money and freedom is the greatest way to change, may you be rich and continue to guide others.

I could not refrain from commenting. Perfectly written!

Way cool! Some extremely valid points! I appreciate you writing this post plus the rest of the website is extremely good.

Everything is very open with a precise clarification of the challenges. It was truly informative. Your website is very helpful. Thanks for sharing!

I love it when people come together and share thoughts. Great site, stick with it!

I would like to thank you for the efforts you’ve put in writing this blog. I’m hoping to check out the same high-grade blog posts by you in the future as well. In fact, your creative writing abilities has inspired me to get my very own website now 😉

An impressive share! I’ve just forwarded this onto a colleague who was conducting a little research on this. And he in fact ordered me lunch because I discovered it for him… lol. So allow me to reword this…. Thanks for the meal!! But yeah, thanks for spending time to discuss this issue here on your web site.

I blog frequently and I genuinely appreciate your information. This great article has really peaked my interest. I’m going to book mark your site and keep checking for new information about once a week. I subscribed to your RSS feed too.

Greetings! Very useful advice in this particular post! It’s the little changes that will make the largest changes. Thanks for sharing!

Great article! We will be linking to this particularly great post on our website. Keep up the good writing.

I was very pleased to uncover this site. I wanted to thank you for ones time just for this fantastic read!! I definitely really liked every part of it and i also have you book marked to see new stuff on your site.

A fascinating discussion is worth comment. I do believe that you need to publish more about this subject, it might not be a taboo subject but typically people do not discuss these subjects. To the next! Many thanks.

I seriously love your site.. Very nice colors & theme. Did you create this web site yourself? Please reply back as I’m attempting to create my own personal website and want to know where you got this from or what the theme is called. Kudos.

It’s hard to come by experienced people for this topic, but you sound like you know what you’re talking about! Thanks

Howdy! This post could not be written any better! Reading through this post reminds me of my previous roommate! He constantly kept talking about this. I’ll send this post to him. Pretty sure he’s going to have a good read. I appreciate you for sharing!

Great info. Lucky me I recently found your blog by accident (stumbleupon). I’ve bookmarked it for later!

Very good post! We will be linking to this particularly great content on our website. Keep up the great writing.

An impressive share! I’ve just forwarded this onto a coworker who was conducting a little homework on this. And he actually bought me lunch because I stumbled upon it for him… lol. So let me reword this…. Thanks for the meal!! But yeah, thanx for spending some time to discuss this issue here on your website.

I blog often and I really thank you for your content. The article has really peaked my interest. I’m going to bookmark your site and keep checking for new information about once a week. I subscribed to your Feed too.

Greetings! Very helpful advice within this post! It is the little changes which will make the largest changes. Thanks a lot for sharing!

Good article. I am experiencing some of these issues as well..

After I initially left a comment I appear to have clicked the -Notify me when new comments are added- checkbox and from now on whenever a comment is added I get four emails with the exact same comment. Perhaps there is an easy method you can remove me from that service? Many thanks.

Aw, this was an incredibly good post. Taking the time and actual effort to make a good article… but what can I say… I hesitate a lot and never manage to get anything done.

Having read this I thought it was very enlightening. I appreciate you spending some time and energy to put this article together. I once again find myself spending way too much time both reading and leaving comments. But so what, it was still worth it!

I wanted to thank you for this fantastic read!! I absolutely loved every bit of it. I’ve got you bookmarked to look at new stuff you post…

Oh my goodness! Impressive article dude! Many thanks, However I am encountering difficulties with your RSS. I don’t understand the reason why I can’t subscribe to it. Is there anybody getting similar RSS issues? Anyone that knows the solution can you kindly respond? Thanks.

Aw, this was a really good post. Taking a few minutes and actual effort to produce a really good article… but what can I say… I hesitate a whole lot and don’t seem to get anything done.

To the dheeraj3choudhary.com admin, Your posts are always a great source of knowledge.

This website was… how do I say it? Relevant!! Finally I’ve found something which helped me. Kudos!

This web site certainly has all of the information I needed about this subject and didn’t know who to ask.

Everything is very open with a clear description of the challenges. It was definitely informative. Your site is very helpful. Many thanks for sharing.

Hi, I do think this is an excellent site. I stumbledupon it 😉 I’m going to revisit yet again since I bookmarked it. Money and freedom is the best way to change, may you be rich and continue to guide other people.

You made some really good points there. I looked on the web to learn more about the issue and found most people will go along with your views on this web site.

Hi dheeraj3choudhary.com owner, Good work!

May I simply just say what a comfort to find somebody who genuinely understands what they’re discussing online. You actually understand how to bring a problem to light and make it important. A lot more people need to check this out and understand this side of the story. I can’t believe you’re not more popular given that you definitely possess the gift.

I was pretty pleased to discover this site. I wanted to thank you for your time for this particularly wonderful read!! I definitely enjoyed every bit of it and i also have you bookmarked to see new things on your web site.

Everything is very open with a really clear explanation of the issues. It was truly informative. Your site is very useful. Many thanks for sharing.

Everything is very open with a precise description of the issues. It was truly informative. Your site is very helpful. Many thanks for sharing.

Having read this I believed it was very enlightening. I appreciate you spending some time and energy to put this information together. I once again find myself spending way too much time both reading and leaving comments. But so what, it was still worth it!

I truly love your site.. Pleasant colors & theme. Did you develop this amazing site yourself? Please reply back as I’m planning to create my own personal website and would love to know where you got this from or exactly what the theme is named. Many thanks.

You should take part in a contest for one of the most useful blogs on the web. I most certainly will recommend this site!

I needed to thank you for this good read!! I certainly enjoyed every bit of it. I’ve got you saved as a favorite to check out new things you post…

You have made some good points there. I checked on the net for additional information about the issue and found most people will go along with your views on this web site.

I read this article. I think You put a great deal of exertion to make this article. I like your work. 스포츠 중계

Really nice and interesting post. I was looking for this kind of information and enjoyed reading this one. Keep posting. Thanks for sharing 스포츠중계

Howdy, I believe your website may be having browser compatibility issues. When I take a look at your site in Safari, it looks fine but when opening in I.E., it has some overlapping issues. I just wanted to provide you with a quick heads up! Besides that, fantastic website.

Great!!! Thank you for sharing this details. If you need some information about Entrepreneurs than have a look here 59N

Everything is very open with a really clear clarification of the challenges. It was truly informative. Your site is very helpful. Thanks for sharing!

Great post! We will be linking to this great article on our website. Keep up the great writing.

bookmarked!!, I like your web site!

This site truly has all of the information I wanted concerning this subject and didn’t know who to ask.

I’m amazed, I must say. Seldom do I encounter a blog that’s both equally educative and entertaining, and let me tell you, you have hit the nail on the head. The problem is an issue that too few men and women are speaking intelligently about. I am very happy that I came across this in my hunt for something regarding this.

Oh my goodness! Incredible article dude! Many thanks, However I am having problems with your RSS. I don’t understand the reason why I cannot subscribe to it. Is there anybody else having similar RSS issues? Anybody who knows the answer will you kindly respond? Thanx!

You’ve made some decent points there. I looked on the web to find out more about the issue and found most individuals will go along with your views on this website.

Pretty! This has been an extremely wonderful article. Thanks for providing these details.

That is a really good tip especially to those fresh to the blogosphere. Brief but very precise information… Many thanks for sharing this one. A must read article!

I blog often and I really appreciate your content. This article has really peaked my interest. I am going to take a note of your website and keep checking for new details about once a week. I subscribed to your Feed too.

I blog often and I really thank you for your information. This article has really peaked my interest. I’m going to take a note of your site and keep checking for new information about once a week. I subscribed to your Feed too.

Can I simply just say what a relief to discover somebody that actually knows what they are discussing online. You certainly know how to bring a problem to light and make it important. More and more people must look at this and understand this side of the story. I was surprised you’re not more popular given that you certainly possess the gift.

An outstanding share! I’ve just forwarded this onto a co-worker who was conducting a little research on this. And he actually bought me breakfast simply because I stumbled upon it for him… lol. So let me reword this…. Thank YOU for the meal!! But yeah, thanx for spending some time to discuss this topic here on your site.

You’re so cool! I don’t believe I’ve read through something like this before. So nice to discover another person with a few genuine thoughts on this subject. Seriously.. many thanks for starting this up. This site is one thing that is required on the internet, someone with some originality.

Pretty! This was an extremely wonderful post. Thanks for supplying these details.

The next time I read a blog, Hopefully it doesn’t fail me as much as this one. After all, I know it was my choice to read through, nonetheless I actually thought you would have something useful to say. All I hear is a bunch of whining about something you could fix if you were not too busy seeking attention.

Hi, I do think this is an excellent blog. I stumbledupon it 😉 I will revisit yet again since I book marked it. Money and freedom is the best way to change, may you be rich and continue to guide others.

I’m amazed, I have to admit. Rarely do I come across a blog that’s both educative and interesting, and let me tell you, you’ve hit the nail on the head. The problem is something that too few folks are speaking intelligently about. I am very happy I found this during my search for something relating to this.

Hi there! I could have sworn I’ve been to this website before but after browsing through some of the posts I realized it’s new to me. Nonetheless, I’m definitely delighted I came across it and I’ll be bookmarking it and checking back frequently!

Great article! We are linking to this particularly great content on our website. Keep up the good writing.

I have to thank you for the efforts you have put in penning this blog. I’m hoping to check out the same high-grade content by you in the future as well. In truth, your creative writing abilities has motivated me to get my own website now 😉

Excellent site you have here.. It’s hard to find good quality writing like yours these days. I truly appreciate people like you! Take care!!

Love how you’ve broken this down so clearly!

Appreciate the in-depth analysis here!

Aw, this was an exceptionally nice post. Spending some time and actual effort to produce a great article… but what can I say… I hesitate a lot and don’t seem to get nearly anything done.

Hi! I simply want to give you a huge thumbs up for your excellent information you have got here on this post. I am returning to your web site for more soon.

I would like to thank you for the efforts you have put in penning this website. I really hope to check out the same high-grade blog posts by you in the future as well. In truth, your creative writing abilities has encouraged me to get my own website now 😉

This web site definitely has all the information I wanted about this subject and didn’t know who to ask.

I like this site its a master peace ! Glad I observed this on google .

After looking over a number of the blog articles on your web page, I seriously appreciate your way of blogging. I book marked it to my bookmark webpage list and will be checking back in the near future. Please check out my website as well and tell me how you feel.

Having read this I believed it was extremely informative. I appreciate you taking the time and effort to put this short article together. I once again find myself spending way too much time both reading and leaving comments. But so what, it was still worth it!

Very good post! We will be linking to this particularly great content on our website. Keep up the good writing.

I wanted to thank you for this wonderful read!! I absolutely loved every bit of it. I’ve got you book marked to look at new stuff you post…

I’ve had problem with blood sugar changes for many years,

and it really influenced my energy degrees throughout

the day. Because beginning Sugar Defender, I feel more balanced and sharp, and I do not experience those afternoon sags any longer!

I enjoy that it’s a natural option that works without any extreme negative effects.

It’s absolutely been a game-changer for me

As a person who’s always bewared about my blood glucose, finding

Sugar Defender has actually been an alleviation. I feel a lot extra in control,

and my current check-ups have revealed positive renovations.

Recognizing I have a reliable supplement to sustain my

regular gives me assurance. I’m so grateful for Sugar Protector’s impact on my health and wellness!

For several years, I’ve battled unforeseeable blood sugar level swings that left me really feeling drained pipes and lethargic.

But considering that integrating Sugar Protector right into my regular, I have actually observed a significant renovation in my total energy and security.

The feared mid-day thing of the past, and I appreciate that this natural remedy achieves these outcomes

with no unpleasant or adverse responses. truthfully

been a transformative discovery for me.

Including Sugar Protector right into my everyday routine general well-being.

As a person that prioritizes healthy and balanced consuming,

I appreciate the additional defense this supplement gives.

Since beginning to take it, I’ve discovered a significant renovation in my energy degrees and a substantial reduction in my desire for undesirable snacks

such a such an extensive influence on my life.
